The ExtraSensory App

A mobile application for behavioral context recognition and data collection in the wild

| Jump to: | Dataset description | Download | Applications | Relevant papers |

This Android mobile app was developed by Yonatan Vaizman, based on the iPhone version, which was developed by Yonatan Vaizman and Katherine Ellis, Department of Electrical and Computer Engineering, University of California, San Diego.

An earlier version of the ExtraSensory App was first used to collect data in-the-wild from 26 Android users for the ExtraSensory Dataset. The revised version of the ExtraSensory App can serve two purposes:

Go to the ExtraSensory Dataset

For a full description of the app and how to use it (as a user and as a researcher), read the documentation at ExtraSensory App guide.

Here is a brief description:

When the ExtraSensory App runs in the background of the phone, it is scheduled to record a 20-second window of sensor measurements from the phone (e.g. accelerometer, magnetometer, audio, location) as well as from other devices (the current code interfaces with a Pebble watch to collect acceleration from the wrist).

These measurements are sent to a server, which responds with a prediction of the user's context in the form of probabilities assigned to different context labels (e.g. walking, shower, drive - I'm the driver, at home).

The sensor-measurements are stored at the server (in case data-collection is the purpose of using the app).
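As a rough illustration of what a client could do with such a reply, the sketch below keeps only the labels whose probability passes a cutoff. It assumes the reply is a JSON object with parallel `label_names` and `label_probs` arrays; that layout, the function name `likely_labels`, the example probabilities, and the 0.5 threshold are assumptions made for illustration, not details taken from the app.

```python
import json


def likely_labels(response_text, threshold=0.5):
    """Return (label, probability) pairs at or above threshold, most probable first.

    Assumes a hypothetical reply layout with parallel 'label_names' and
    'label_probs' arrays; the real server format may differ.
    """
    reply = json.loads(response_text)
    pairs = zip(reply["label_names"], reply["label_probs"])
    selected = [(name, prob) for name, prob in pairs if prob >= threshold]
    return sorted(selected, key=lambda pair: pair[1], reverse=True)


# Made-up reply for illustration only.
example_reply = json.dumps({
    "label_names": ["walking", "shower", "drive - I'm the driver", "at home"],
    "label_probs": [0.82, 0.02, 0.01, 0.64],
})

print(likely_labels(example_reply))
```

Because several labels can be relevant at once, the sketch thresholds each label independently instead of picking a single winner.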

In addition, the app's interface is flexible and offers several mechanisms for users to report labels describing their own behavioral context, whether in the past, the recent past, or the near future:

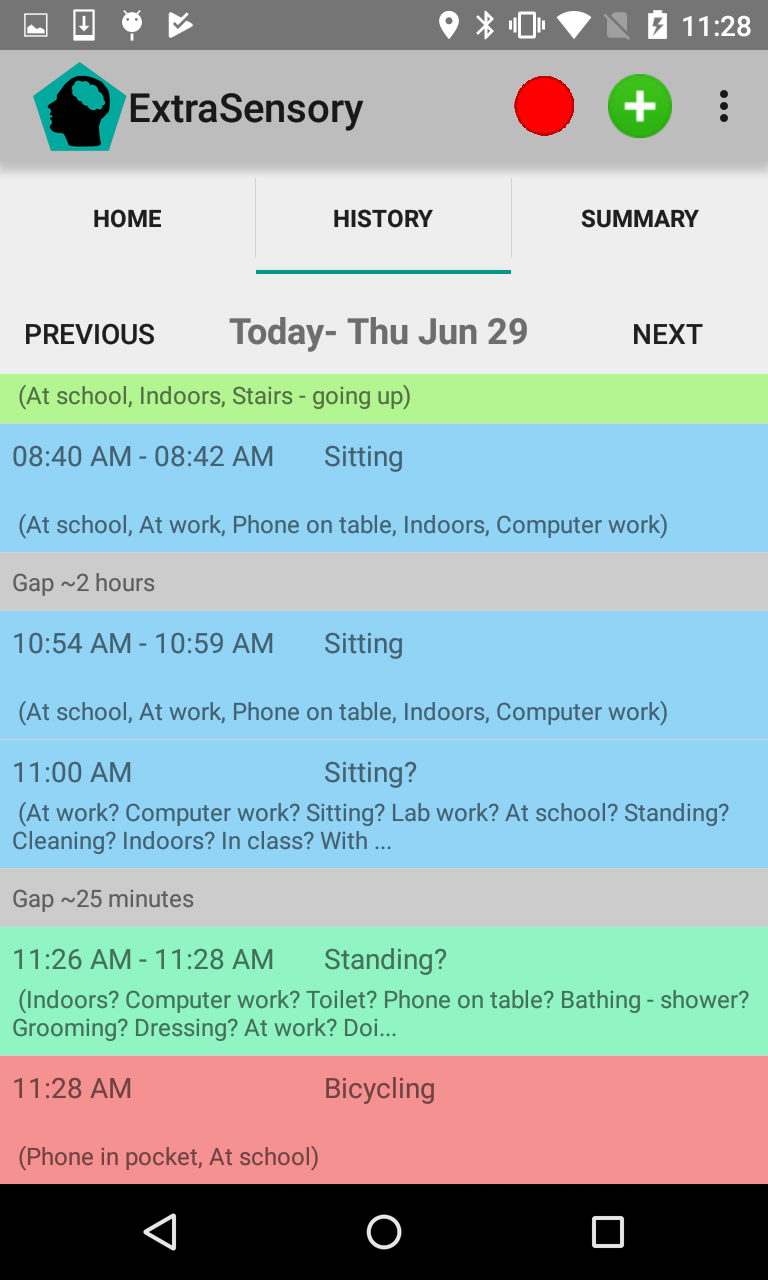

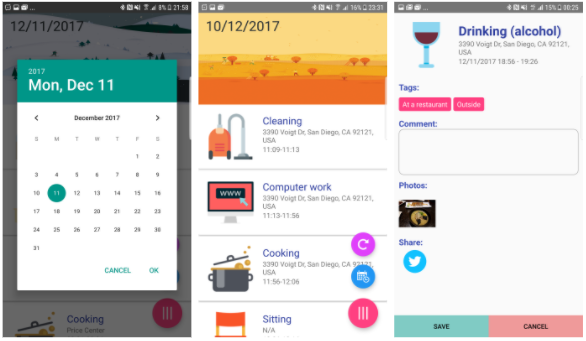

| History view. Designed as a daily journal in which every item is a stretch of time during which the context stayed the same. Real-time predictions from the server are sent back to the phone and appear with question marks. By clicking on an item in the history, the user can provide their actual context labels by selecting from menus (including multiple relevant labels together). The user can also easily merge consecutive history items into a longer time period during which the context was constant, or split an item if the context changed during its period of time. The user can view the daily journal of previous days, but can only edit labels for today and yesterday. |
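The merge and split operations of the history view can be sketched as follows. This is only an illustration under stated assumptions: the record layout `(minute_index, labels)`, the function names `build_history` and `split_item`, and the example labels are hypothetical and do not come from the app's code.

```python
from itertools import groupby


def build_history(minutes):
    """Group adjacent (minute_index, labels) records that share the same labels.

    `minutes` must be sorted by minute index. Adjacent records are treated as
    consecutive; gaps between minutes are not checked in this sketch.
    Returns a list of (start_minute, end_minute, labels) items.
    """
    items = []
    for labels, group in groupby(minutes, key=lambda record: record[1]):
        group = list(group)
        items.append((group[0][0], group[-1][0], labels))
    return items


def split_item(item, at_minute):
    """Split one history item in two; `at_minute` starts the second part."""
    start, end, labels = item
    if not start < at_minute <= end:
        raise ValueError("split point must fall inside the item")
    return (start, at_minute - 1, labels), (at_minute, end, labels)


# Made-up per-minute labels for illustration only.
minutes = [
    (0, frozenset({"walking"})),
    (1, frozenset({"walking"})),
    (2, frozenset({"sitting", "at home"})),
    (3, frozenset({"sitting", "at home"})),
    (4, frozenset({"sitting", "at home"})),
]

history = build_history(minutes)
print(history)
print(split_item(history[1], 4))
```

Using a `frozenset` for the labels lets two minutes compare equal whenever they carry the same set of labels, regardless of the order in which the labels were selected.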

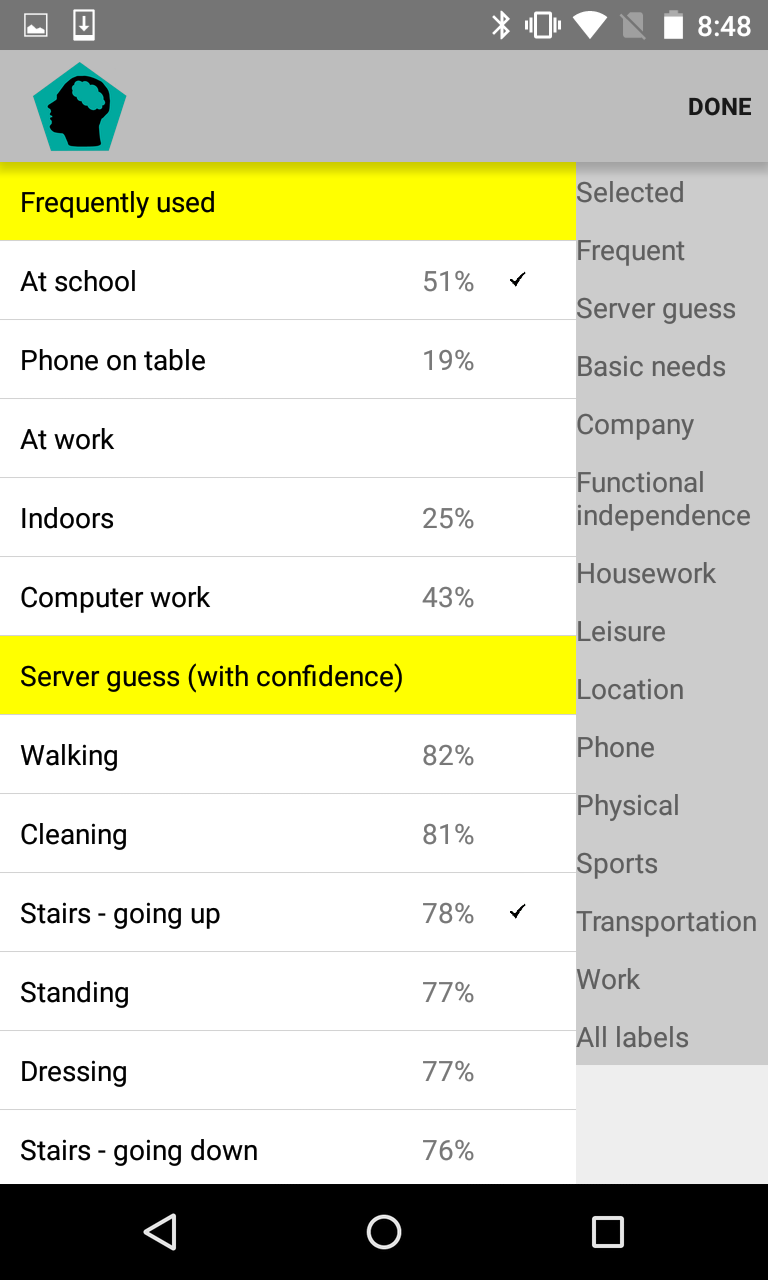

| Label selection view. The interface to select the so-called 'secondary activity' has a large menu of over 100 context labels. The user has the option to select multiple labels simultaneously. To help the user find the relevant labels quickly, the menu is equipped with a side-bar index. The user can find a relevant label in the appropriate index-topic(s), e.g. 'Skateboarding' can be found under 'Sports' and under 'Transportation'. The 'frequent' index-topic is a convenient link to show the user their own personalized list of frequently-used labels. The 'server guess' topic shows the server-predicted labels from more probable to less probable, with the probability that the server assigned each label. |
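One way to picture the side-bar index and the 'frequent' topic is the sketch below. Only the 'Skateboarding' entry comes from the description above; the other labels, the mapping `LABEL_TOPICS`, and the function names are hypothetical and serve only to make the example runnable.

```python
from collections import Counter, defaultdict

# Hypothetical label-to-topics mapping; only 'Skateboarding' is taken from the text.
LABEL_TOPICS = {
    "Skateboarding": ["Sports", "Transportation"],
    "Running": ["Sports"],
    "On a bus": ["Transportation"],
}


def build_index(label_topics):
    """Invert label -> topics into topic -> alphabetically sorted labels."""
    index = defaultdict(list)
    for label, topics in label_topics.items():
        for topic in topics:
            index[topic].append(label)
    return {topic: sorted(labels) for topic, labels in index.items()}


def frequent_labels(past_reports, top_n=3):
    """Return the user's most often reported labels, most frequent first.

    `past_reports` is a list of label lists, one per past report.
    """
    counts = Counter(label for report in past_reports for label in report)
    return [label for label, _ in counts.most_common(top_n)]


index = build_index(LABEL_TOPICS)
print(index["Sports"])
print(index["Transportation"])
```

A label that belongs to several topics is simply listed under each of them, which matches the way 'Skateboarding' appears under both 'Sports' and 'Transportation'.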

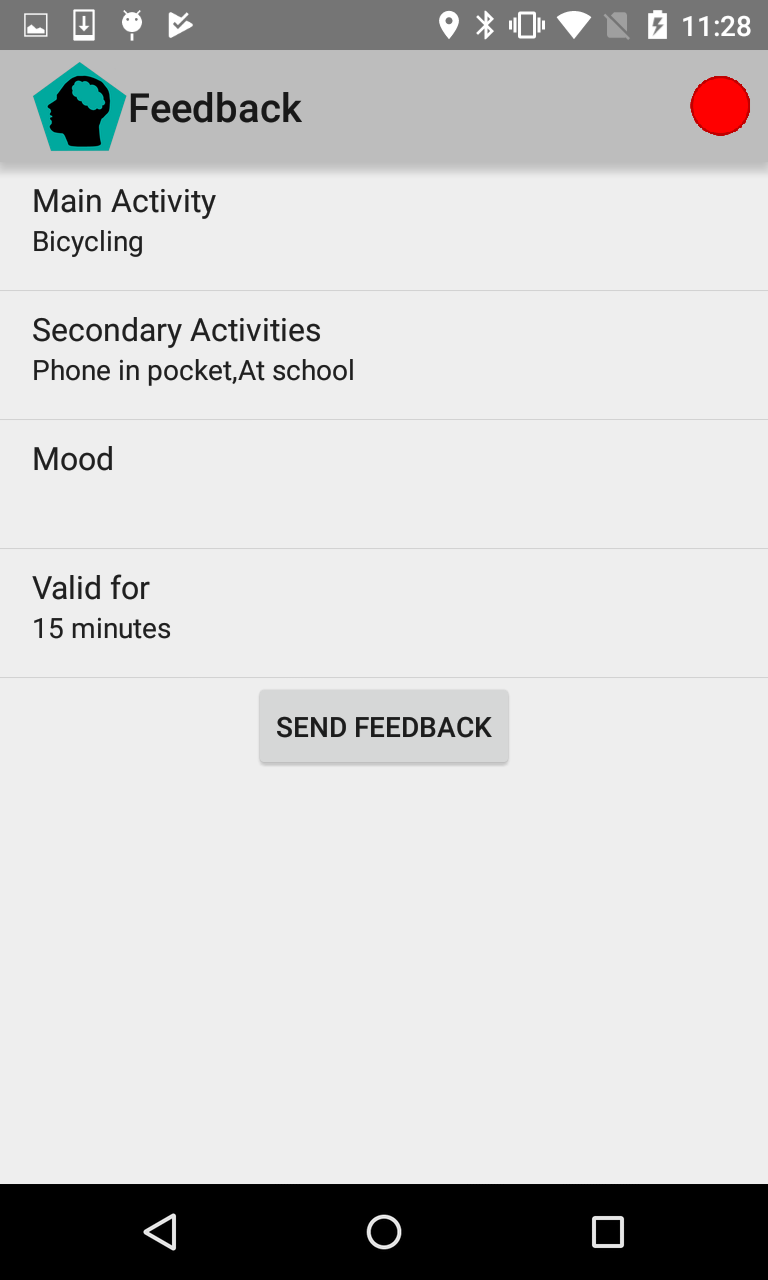

| Active feedback. The user can report the relevant context labels for the immediate future and report that the same labels will stay relevant for a selected amount of time (up to 30 minutes). |

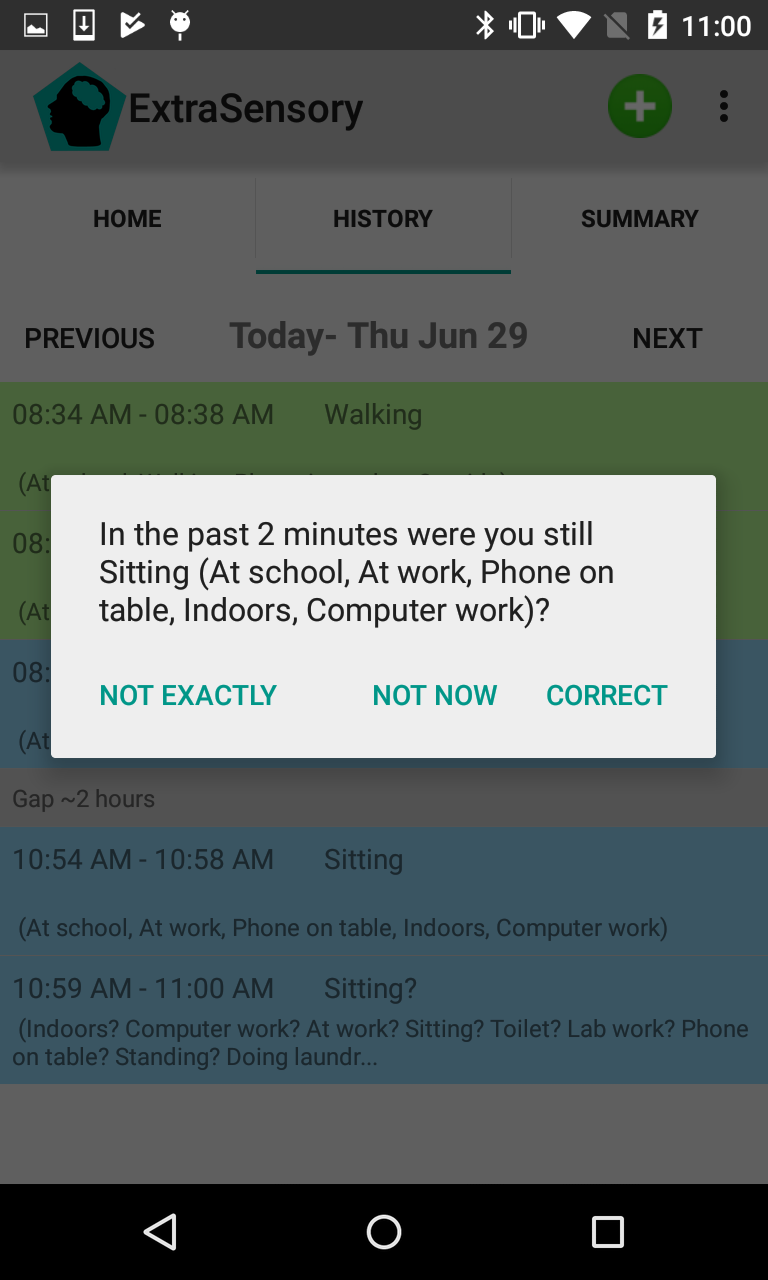

| Notifications. Periodically (every 20 minutes by default, but the user can set the interval) the app raises a notification to the user. If no labels have been reported for a while, the notification asks the user to provide labels. If the user reported labels recently, the notification asks whether the context has remained the same until now. |
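The choice between the two kinds of notification can be sketched as a single decision. The text does not say how long "a while" is, so the 20-minute `recent_window` below is an assumption, as are the function name `choose_notification` and the returned strings.

```python
from datetime import datetime, timedelta


def choose_notification(now, last_report_time, recent_window=timedelta(minutes=20)):
    """Pick which prompt to show when the periodic notification fires.

    `recent_window` is an assumed cutoff for "reported recently"; the value
    the app actually uses is not stated in the description.
    """
    if last_report_time is None or now - last_report_time > recent_window:
        return "ask_for_labels"
    return "ask_if_context_unchanged"


now = datetime(2018, 1, 1, 12, 0)
print(choose_notification(now, None))
print(choose_notification(now, now - timedelta(minutes=5)))
```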

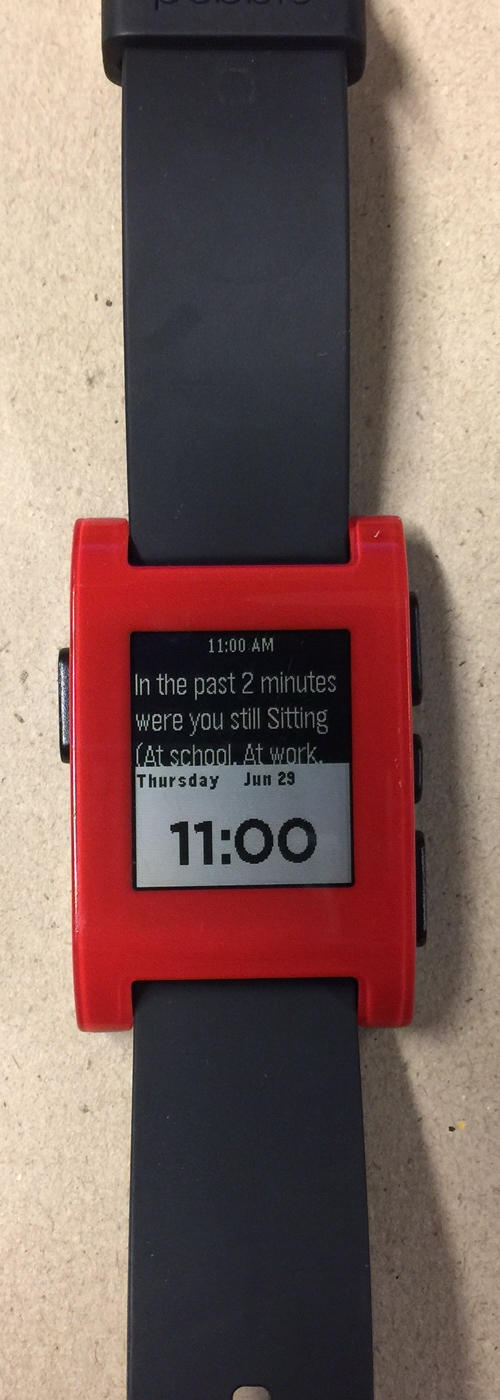

| Notifications - watch. Notifications also appear on the face of the smartwatch, and if the context labels remain the same, a single click of a watch button is enough to apply the same labels to all the recent minutes. |

The ExtraSensory App is publicly available for download for free at:

https://github.com/cal-ucsd/ExtraSensoryAndroid

You are welcome to clone the GitHub repository, make your own improvements, contribute public source code, or develop other apps based on the app.

If you use the ExtraSensory App for your published work, you are required to cite our publication, mentioned here as Vaizman2018a.

Notice! The ExtraSensory App collects sensitive/private data about whoever is using it and communicates it to a server.

So before you install the app on anyone's phone, you should be thoroughly familiar with it and with what it does.

First, read the documentation at ExtraSensory App guide.

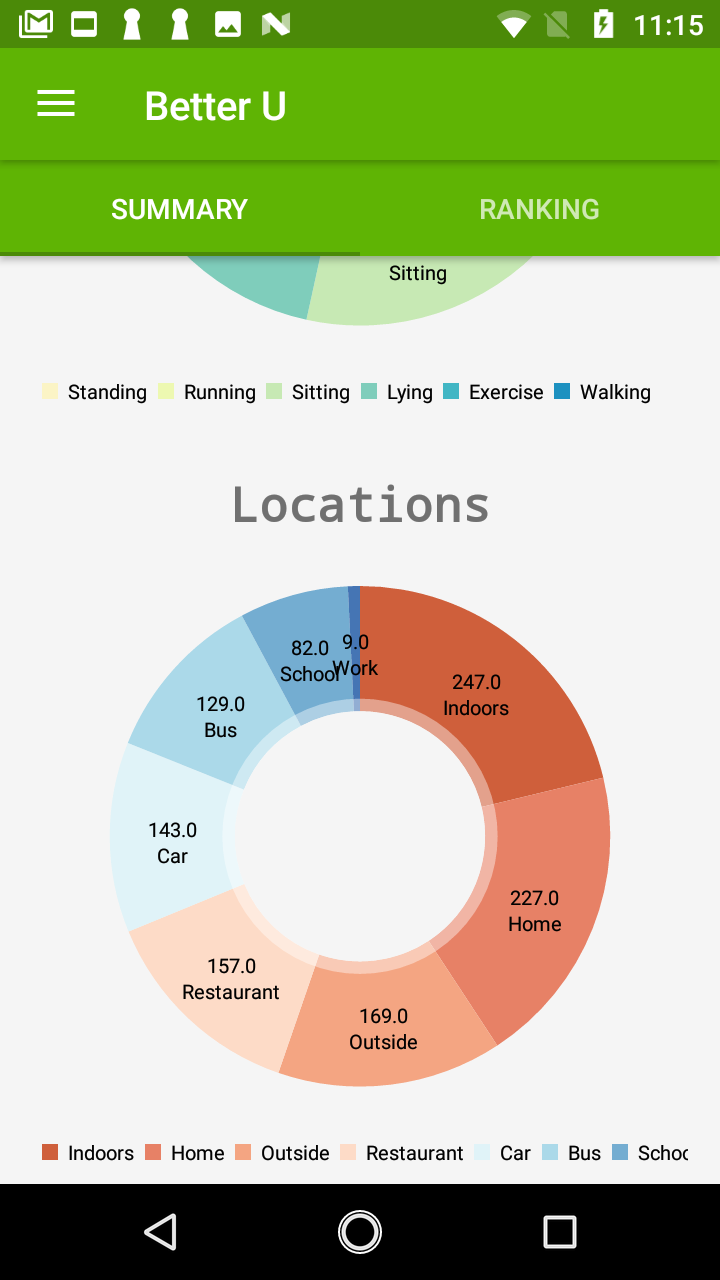

There are many domains that can benefit from automatic behavioral context recognition: mobile health, aging care, personal assistants, recommendation systems, and more.

If you want to develop a mobile app that uses context recognition, you can use the code of the ExtraSensory App and add more functionality or change the design based on that code.

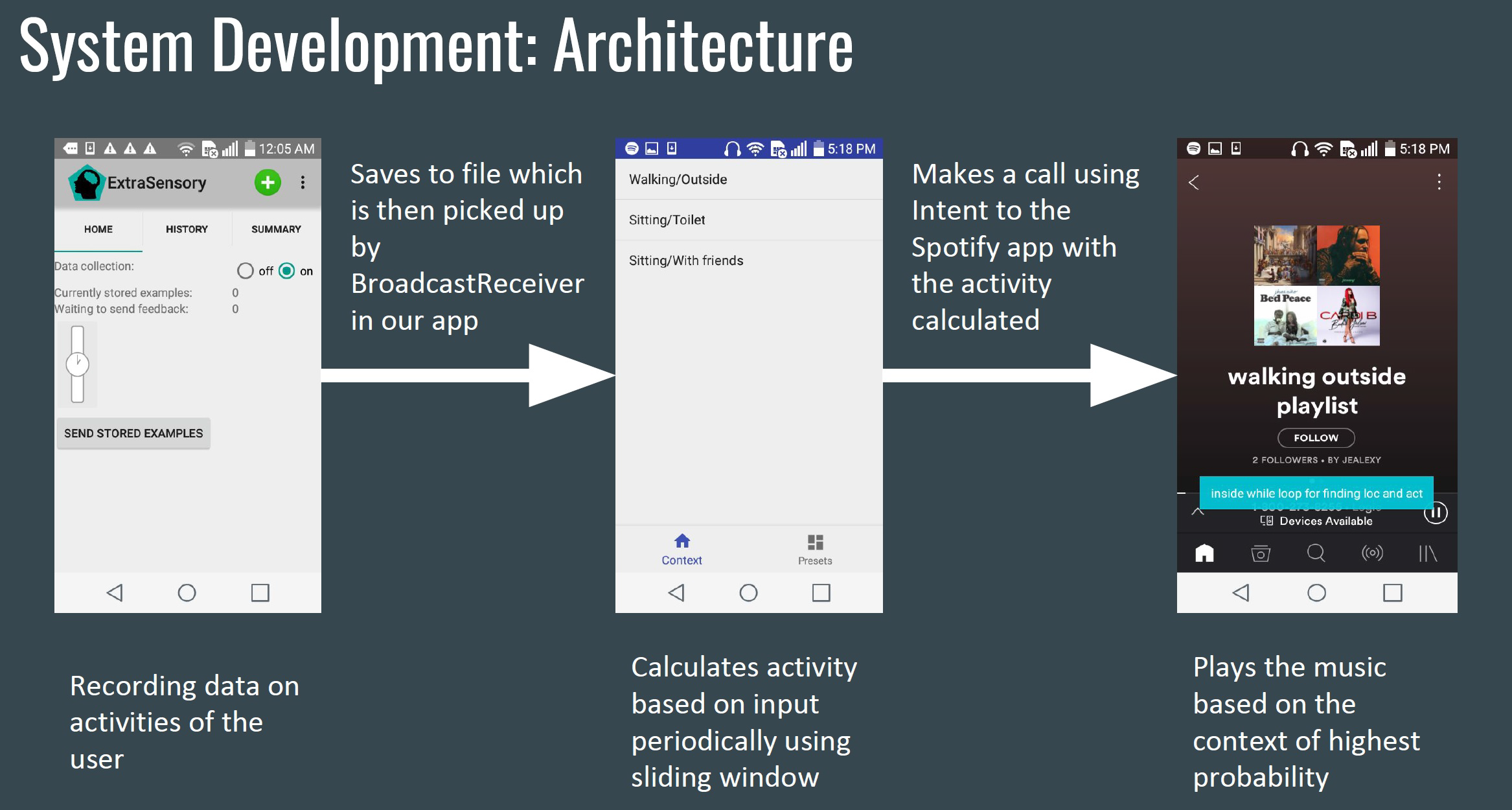

As an alternative, the ExtraSensory App allows you to use it as a black-box tool for context recognition: just keep it running in the background of the phone, and it will save files with the recognized context for every recorded minute. You can then develop your own app that reads these files and makes use of the recognized context to provide something useful or desirable to users.
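A companion program that consumes these per-minute files might look like the sketch below, which counts how many recorded minutes each label was the top prediction. The file naming (`<timestamp>.json`), the JSON layout with parallel `label_names` and `label_probs` arrays, and the function names are assumptions for illustration; consult the ExtraSensory App guide for the actual file format and location.

```python
import json
import tempfile
from collections import Counter
from pathlib import Path


def top_label(prediction):
    """Return the label with the highest probability in one per-minute record."""
    names, probs = prediction["label_names"], prediction["label_probs"]
    best = max(range(len(probs)), key=probs.__getitem__)
    return names[best]


def minutes_per_context(directory):
    """Count, over all per-minute files, how often each label was the top prediction.

    Assumes one hypothetical '<timestamp>.json' file per recorded minute.
    """
    counts = Counter()
    for path in sorted(Path(directory).glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            counts[top_label(json.load(handle))] += 1
    return counts


# Demo on made-up files written to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    samples = {
        "1500000000": [0.9, 0.1],
        "1500000060": [0.8, 0.3],
        "1500000120": [0.2, 0.7],
    }
    for timestamp, probs in samples.items():
        payload = {"label_names": ["sitting", "walking"], "label_probs": probs}
        (Path(tmp) / f"{timestamp}.json").write_text(json.dumps(payload), encoding="utf-8")
    demo_counts = minutes_per_context(tmp)

print(demo_counts)
```

An aggregate like this suits applications that analyze longer-term behavior logs; an app that reacts in the moment would instead read only the most recent file.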

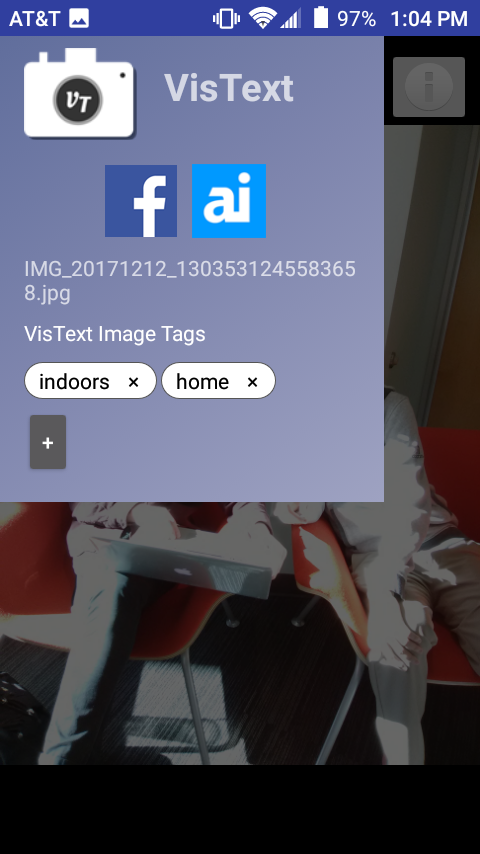

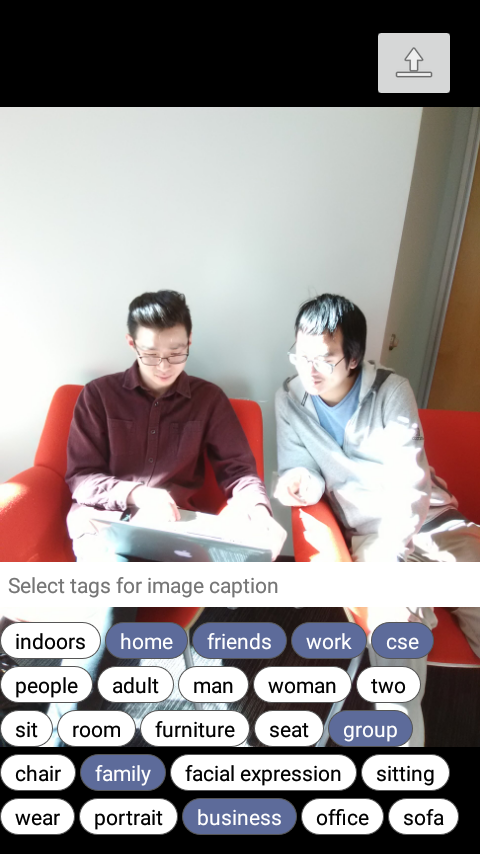

During Fall 2017, as part of Prof. Nadir Weibel's Ubiquitous Computing class at the University of California, San Diego, students developed mobile applications based on the ExtraSensory App. Some of the applications analyzed longer-term logs of behavior, and others provided at-the-moment functionality that uses real-time context recognition. Here are some examples:

| Vaizman2017a | link (official), pdf (accepted), Supplementary |
| Vaizman2017b | link (official), pdf (accepted), Supplementary |
| Vaizman2018a | link (official), to be published April 2018 |